Gen5 QLC client SSDs, enterprise LPDDR5X with RAIDDR ECC, and HBM4-era AI memory concepts anchor a week of announcements.

Some of the biggest names in memory at CES 2026 conveyed a unified message about storage and DRAM: modern systems spend too much time and energy moving data and too little actually computing. Micron, Cadence, and SK Hynix each arrived with a different answer to that problem.

Micron is pushing PCIe Gen5 performance and high capacity into compact client form factors using QLC NAND, while Cadence is making LPDDR5X viable in servers by closing the reliability gap with DDR5. SK Hynix, meanwhile, used CES to show where AI memory is heading next, from denser HBM stacks to memory-side compute concepts meant to reduce data movement.

Micron 3610 Targets Thin-and-Light PCs

Micron’s main announcement was the Micron 3610 NVMe SSD, which it describes as the world’s first PCIe Gen5 SSD built with its G9 QLC NAND for client computing. The 3610 targets a major pain point for thin laptops and small-form-factor systems, which need the responsiveness of Gen5 storage along with higher capacity in a limited board area.

Micron calls the 3610 SSD the "world’s first Gen5 G9 QLC client SSD." Image used courtesy of Micron

The 3610 boasts up to 11,000 MB/s sequential reads and 9,300 MB/s sequential writes, with up to 1.5 M random read IOPS and 1.6 M random write IOPS. The 3610’s form factor is arguably the most consequential spec for OEM design teams; Micron says it offers the world’s only 4-TB capacity in a single-sided M.2 2230 card, aimed at ultra-thin laptops and AI-capable devices.
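
Random IOPS figures are easier to compare with the sequential numbers once they are converted to throughput. The sketch below does that conversion, assuming 4 KiB transfers, which is the common convention for client SSD random-I/O specs; Micron's exact test conditions (transfer size, queue depth) are not given here, so treat the results as approximate.

```python
# Convert the quoted random IOPS figures into equivalent throughput.
# ASSUMPTION: 4 KiB per random I/O, a common convention for client SSD specs;
# Micron's actual test conditions are not stated in the announcement.
BLOCK_BYTES = 4 * 1024  # assumed transfer size per random I/O

def iops_to_mb_per_s(iops: float, block_bytes: int = BLOCK_BYTES) -> float:
    """Return throughput in decimal MB/s (10**6 bytes per second)."""
    return iops * block_bytes / 1e6

random_read_mb_s = iops_to_mb_per_s(1.5e6)   # 1.5 M random read IOPS
random_write_mb_s = iops_to_mb_per_s(1.6e6)  # 1.6 M random write IOPS
seq_read_mb_s = 11_000                       # quoted sequential read rate

print(f"random read : {random_read_mb_s:,.0f} MB/s")
print(f"random write: {random_write_mb_s:,.0f} MB/s")
print(f"random read vs. sequential read: {random_read_mb_s / seq_read_mb_s:.0%}")
```

Under that assumption, the random-read figure works out to roughly 6.1 GB/s, a bit over half the sequential read rate.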

Micron, which recently announced the closure of its Crucial consumer business, is leaning heavily on efficiency with the 3610. The drive uses a DRAM-less architecture with host memory buffer (HMB) support and DEVSLP low-power states, which Micron says improves performance per watt by 43% over Gen4 TLC in its own comparisons. The company also cites user-facing gains, including up to 30% higher scores and 28% higher bandwidth than Gen4 QLC SSDs in PCMark 10 testing, and claims the drive can load a 20-billion-parameter AI model in under three seconds.
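
Micron's model-loading claim can be sanity-checked with simple arithmetic. The sketch assumes the weights stream at the drive's quoted 11,000 MB/s peak sequential rate with no file-system, decompression, or host overhead, and it tries a few hypothetical weight precisions, since the announcement does not say which one Micron used.

```python
# Back-of-envelope: time to read a 20B-parameter model at 11,000 MB/s.
# ASSUMPTIONS (not stated by Micron): sequential reads at the peak rate,
# no file-system, decompression, or host-side overhead.
PARAMS = 20e9
SEQ_READ_BYTES_PER_S = 11_000e6  # 11,000 MB/s in decimal megabytes

# Hypothetical weight precisions; the actual format is not disclosed.
BYTES_PER_PARAM = {"FP16": 2.0, "INT8": 1.0, "INT4": 0.5}

def load_seconds(bytes_per_param: float) -> float:
    """Idealized load time: model size divided by peak sequential read rate."""
    model_bytes = PARAMS * bytes_per_param
    return model_bytes / SEQ_READ_BYTES_PER_S

for fmt, bpp in BYTES_PER_PARAM.items():
    size_gb = PARAMS * bpp / 1e9
    print(f"{fmt}: {size_gb:.0f} GB -> {load_seconds(bpp):.2f} s")
```

Under these idealized assumptions, FP16 weights (about 40 GB) would take roughly 3.6 s, so a sub-three-second load is consistent with an 8-bit or smaller quantized model. That is an inference from the arithmetic, not a detail Micron has confirmed.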

Cadence Brings DDR5-Class RAS to LPDDR5X

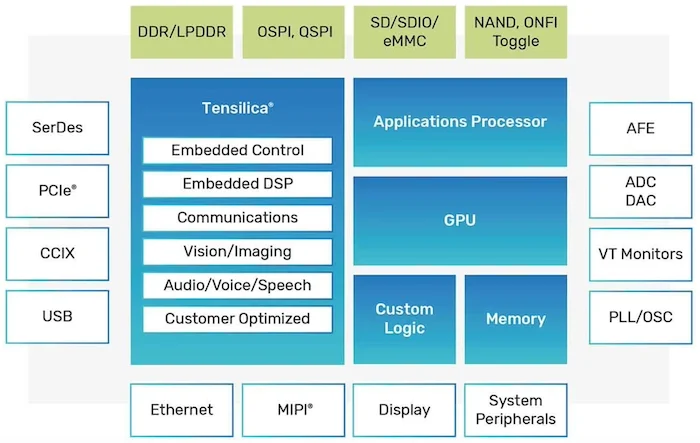

Interest in LPDDR5X for data centers, driven by its power advantages, is running into reliability limits. Hyperscalers want LPDDR’s power, performance, and area (PPA) benefits, but they also want the reliability, availability, and serviceability (RAS) associated with DDR5. Cadence believes that you should not have to choose between the two.

The company introduced what it calls the industry’s first LPDDR5X 9600-Mbps memory IP “system solution” designed for high-reliability enterprise and data center applications, integrating Cadence LPDDR5X IP with Microsoft’s RAIDDR ECC coding scheme. Cadence named Microsoft as the first customer to deploy the solution.

Overview of Cadence's memory and storage IP solutions. Image used courtesy of Cadence

RAIDDR is described as a next-generation ECC algorithm that achieves close to single-device data correction (SDDC) with minimal logic overhead, delivering protection equivalent to symbol-based ECC typically used in DDR5 RDIMM-class implementations. Cadence says the solution offers sideband ECC performance comparable to traditional DDR5 ECC implementations while preserving LPDDR5X PPA in a compact form factor.
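
The core idea behind single-device data correction is that each data word is spread across several DRAM devices plus redundancy, so the contents of one whole device can be rebuilt. The toy sketch below shows only that idea, using XOR parity. It is not RAIDDR, whose internals are not described here, and unlike real SDDC schemes, which use symbol-based codes such as Reed-Solomon that can also locate the failing device, it assumes the failed device is already known. The word layout and symbol values are hypothetical.

```python
# Toy illustration of the idea behind SDDC: one word striped across devices
# plus a redundancy device, so one whole device's data can be rebuilt.
# NOT RAIDDR. Real SDDC/chipkill codes can also locate the bad device;
# this XOR sketch assumes the failed device is already known (an erasure).
from functools import reduce

def encode(data_symbols: list[int]) -> list[int]:
    """Append one parity symbol: the XOR of all per-device data symbols."""
    parity = reduce(lambda a, b: a ^ b, data_symbols, 0)
    return data_symbols + [parity]

def rebuild(stored: list[int], failed_device: int) -> list[int]:
    """Recover a known-failed device's symbol from the surviving devices."""
    survivors = [s for i, s in enumerate(stored) if i != failed_device]
    recovered = reduce(lambda a, b: a ^ b, survivors, 0)
    fixed = list(stored)
    fixed[failed_device] = recovered
    return fixed[:-1]  # drop the parity symbol, return data symbols only

# Hypothetical word: four 8-bit symbols, one per data device.
word = [0x12, 0x34, 0x56, 0x78]
stored = encode(word)
stored[2] = 0x00  # simulate device 2 returning garbage
print(rebuild(stored, failed_device=2) == word)  # True
```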

Cadence’s feature list includes support for 40-bit channels using LPDDR5X DRAM, sideband ECC for maximum channel bandwidth, and a 9600-Mbps data rate. The stated target is AI training and inference infrastructure, where memory, power, and reliability are frequently system-level constraints.
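
Putting the channel width and data rate together gives a rough peak bandwidth per channel. The sketch below assumes the 40 bits split into 32 data bits and 8 sideband ECC bits, mirroring a DDR5 ECC subchannel; Cadence's exact lane allocation is not stated here.

```python
# Rough peak bandwidth for a 40-bit LPDDR5X channel at 9600 Mbps per pin.
# ASSUMPTION: 40 bits = 32 data + 8 sideband ECC bits (DDR5-style split);
# the actual lane allocation in Cadence's solution is not given.
DATA_RATE_MBPS_PER_PIN = 9600
DATA_BITS = 32
ECC_BITS = 8
TOTAL_BITS = DATA_BITS + ECC_BITS  # 40-bit channel

def channel_gb_per_s(bits: int, mbps_per_pin: int = DATA_RATE_MBPS_PER_PIN) -> float:
    """Peak rate in decimal GB/s for `bits` pins: bits * Mbps / 8 / 1000."""
    return bits * mbps_per_pin / 8 / 1000

usable = channel_gb_per_s(DATA_BITS)
raw = channel_gb_per_s(TOTAL_BITS)
print(f"raw pin bandwidth : {raw:.1f} GB/s")
print(f"usable data       : {usable:.1f} GB/s")
print(f"ECC share of pins : {ECC_BITS / TOTAL_BITS:.0%}")
```

Under that assumption, each channel moves about 38.4 GB/s of data, with ECC occupying 20% of the pins. Because the check bits travel on their own lanes, they do not consume data-bus transfers, which is the bandwidth advantage of sideband ECC over inline ECC schemes that store check bits alongside the data.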

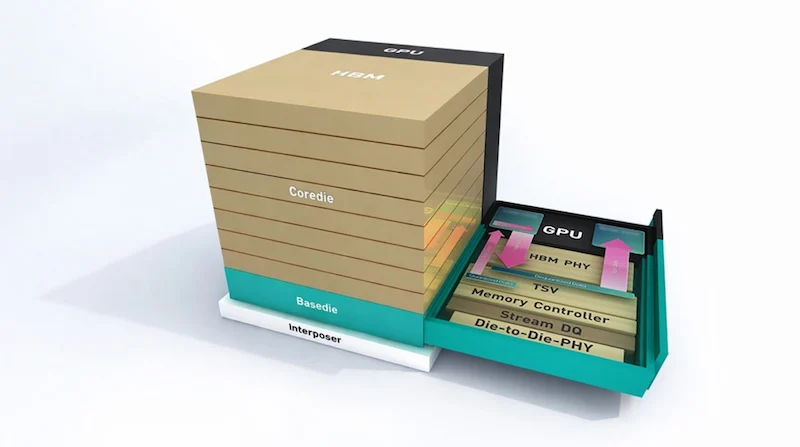

SK Hynix Highlights HBM4 Density

HBM4 naturally came up, with SK Hynix using CES 2026 to spotlight the next phase of AI memory scaling: more bandwidth, more capacity per package, and more logic moving into the memory subsystem. At its customer exhibition booth, the company showcased a 16-layer, 48-GB HBM4 product, positioned as the next step beyond its 12-layer, 36-GB HBM4 (which has demonstrated speeds of 11.7 Gbps).
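
The capacity and speed figures also yield a couple of quick derived numbers. The sketch below assumes that 11.7 Gbps is a per-pin data rate and that the stack uses the 2048-bit interface defined in the JEDEC HBM4 standard; neither detail is stated in the announcement.

```python
# Back-of-envelope HBM4 figures from the numbers SK Hynix showed at CES.
# ASSUMPTIONS: 11.7 Gbps is a per-pin rate, and the stack uses the
# 2048-bit interface of the JEDEC HBM4 standard.
INTERFACE_BITS = 2048

stacks = {
    "12-layer HBM4": {"layers": 12, "capacity_gb": 36},
    "16-layer HBM4": {"layers": 16, "capacity_gb": 48},
}

def per_die_gbit(capacity_gb: float, layers: int) -> float:
    """Capacity of each core DRAM die in gigabits (GB * 8 / layers)."""
    return capacity_gb * 8 / layers

def stack_bandwidth_tb_s(gbps_per_pin: float, bits: int = INTERFACE_BITS) -> float:
    """Peak stack bandwidth in TB/s: pins * Gbps / 8 bits per byte / 1000."""
    return gbps_per_pin * bits / 8 / 1000

for name, s in stacks.items():
    print(f"{name}: {per_die_gbit(s['capacity_gb'], s['layers']):.0f} Gb per die")

print(f"12-layer at 11.7 Gbps/pin: {stack_bandwidth_tb_s(11.7):.2f} TB/s per stack")
```

Both stack heights work out to 24-Gb core dies, which suggests the 16-layer part gains capacity from four additional dies rather than denser ones; at 11.7 Gbps per pin, a 2048-bit stack would peak near 3 TB/s.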

SK Hynix also exhibited a 12-layer, 36-GB HBM3E, which it framed as a key volume driver for 2026, and displayed SOCAMM2, a low-power memory module tailored for AI servers. The booth lineup extended to LPDDR6 for on-device AI and a 321-layer, 2-Tb QLC NAND product aimed at ultra-high-capacity enterprise SSDs.

SK Hynix's Custom HBM (cHBM). Image used courtesy of SK Hynix

At its "AI System Demo Zone," SK Hynix let visitors explore the company's concepts for reducing data movement. These included custom HBM (cHBM), which moves some functions onto the HBM base die to cut data-transfer power and enable processing in memory. The company also demonstrated a prototype accelerator card based on its GDDR6 AiM (accelerator-in-memory) concept, along with concepts for compute-enabled CXL memory modules for pooled memory expansion.