New AI upscaling tech points to a 2026 mobile graphics future driven by on-chip inference.

For mobile GPU designers, delivering performance isn’t just about pixels anymore. With gaming visuals growing more cinematic and displays pushing higher frame rates, the industry is turning to machine learning not just to enhance graphics, but to generate them more efficiently. Arm has now stepped firmly into that future.

In a newly announced roadmap, Arm revealed its plan to embed dedicated neural processing units (NPUs) inside future GPUs, beginning with a 2026 target for production silicon. The goal isn’t just faster AI, but AI that lives natively inside the graphics pipeline.

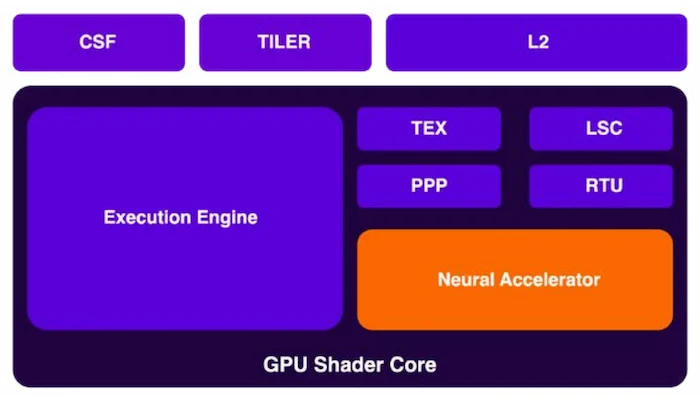

Arm is adding dedicated neural accelerators to Arm GPUs to bring AI-powered graphics to mobile and lay the foundation for future on-device AI.

Alongside this hardware vision, Arm also introduced Neural Super Sampling (NSS), a software-based AI upscaling engine that enhances frame quality while reducing GPU load. Together, they represent a shift: not just supporting AI workloads on mobile, but architecting GPUs around them.

Making AI a Native GPU Feature

Traditional GPU pipelines were never designed with machine learning in mind. While general-purpose compute shaders and ML frameworks can enable inference on existing hardware, the memory access patterns and latency demands of neural workloads often make these approaches inefficient, especially on power-constrained mobile devices. Arm’s 2026 architecture aims to address this by tightly coupling a neural execution engine with the graphics core itself.

The company hasn’t yet disclosed full silicon details, but the current prototype system, built on an Arm Mali GPU platform with offloaded ML execution, already demonstrates key advantages. A purpose-built Neural Shader Scheduler coordinates between the GPU and NPU, offloading inference tasks such as motion estimation, upscaling, and frame reconstruction. This division of labor not only cuts total power draw but also avoids GPU stalls caused by unpredictable ML compute.

Arm says the neural accelerator will come to play a crucial role in future Arm GPUs.

The development platform also features dedicated memory interfaces to reduce copy overhead, as well as support for popular ML runtimes like TensorFlow Lite and ONNX. According to Arm, the future hardware will natively support streaming inference from game engines in real time, paving the way for AI-enhanced rendering techniques beyond upscaling, such as DLSS-style anti-aliasing, denoising, or even texture generation.

What Makes NSS Different

Upscaling is not new. But where classical techniques like bilinear or bicubic interpolation rely on deterministic math, neural methods learn context, understanding how to reconstruct lost detail based on patterns seen during training. Arm’s NSS leverages a lightweight convolutional network trained on game-like content, capable of 2x and 4x upscaling while preserving temporal coherence.
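To make the contrast concrete, here is a minimal sketch of the classical side of that comparison: bilinear interpolation in plain Python. It is not Arm's NSS and involves no learning; the function name `bilinear_upscale` and the corner-aligned sampling convention are assumptions chosen for illustration. Every output pixel is a fixed weighted average of its four nearest source pixels, which is why this approach cannot restore detail that the low-resolution frame never contained.

```python
def bilinear_upscale(img, factor):
    """Upscale a 2D grid of floats by an integer factor (illustrative helper)."""
    h, w = len(img), len(img[0])
    out_h, out_w = h * factor, w * factor
    # Scale factors that map output coordinates back onto the source grid,
    # chosen so the four corners of the output match the source corners.
    sy = (h - 1) / (out_h - 1) if out_h > 1 else 0.0
    sx = (w - 1) / (out_w - 1) if out_w > 1 else 0.0
    out = []
    for y in range(out_h):
        fy = y * sy
        y0 = int(fy)
        y1 = min(y0 + 1, h - 1)
        ty = fy - y0  # vertical blend weight
        row = []
        for x in range(out_w):
            fx = x * sx
            x0 = int(fx)
            x1 = min(x0 + 1, w - 1)
            tx = fx - x0  # horizontal blend weight
            top = img[y0][x0] * (1 - tx) + img[y0][x1] * tx
            bottom = img[y1][x0] * (1 - tx) + img[y1][x1] * tx
            row.append(top * (1 - ty) + bottom * ty)
        out.append(row)
    return out


# A 2x2 "frame" upscaled to 4x4: new pixels are blends of existing ones.
small = [[0.0, 1.0], [2.0, 3.0]]
large = bilinear_upscale(small, 2)
```

A neural upscaler replaces the fixed blend weights with a trained network, typically fed extra inputs such as previous frames, so its output depends on learned patterns rather than on neighbor averaging alone.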

In early demos, NSS reconstructed 1080p frames from 540p input with minimal perceptual loss, and did so fast enough to run in real time on current-generation mobile NPUs. Unlike server-side AI enhancements used in cloud gaming, NSS is entirely local, meaning players retain full visual fidelity even during connectivity drops or offline play. And because the model is optimized for Mali GPUs and Arm’s Ethos-N series NPUs, it’s built to scale across a wide range of SoC configurations.
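The appeal of the 540p-to-1080p case is easiest to see as pixel arithmetic. The sketch below assumes 16:9 frames (960x540 and 1920x1080) and treats the scale factor as a per-axis multiplier; both are assumptions, since the article does not state how Arm defines its factors. The helper name `render_resolution` is hypothetical.

```python
def render_resolution(target_w, target_h, scale):
    """Return the internal render size and the fraction of target pixels shaded.

    `scale` is assumed to apply per axis, so scale=2 halves width and height.
    """
    render_w = target_w // scale
    render_h = target_h // scale
    fraction = (render_w * render_h) / (target_w * target_h)
    return render_w, render_h, fraction


w, h, fraction = render_resolution(1920, 1080, 2)
print(f"Render at {w}x{h}, shading {fraction:.0%} of the output pixels")
```

Under those assumptions the GPU shades a quarter of the pixels it would at native 1080p, and the upscaler has to fill in the rest within the same frame budget. Actual savings depend on how much of the frame cost scales with resolution and on the cost of the inference pass itself.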

The NSS engine also opens new territory for developers. Arm’s prototype Unity plug-in allows real-time swapping between native and upscaled frames, with customizable inference parameters exposed to the game engine. That kind of control turns AI from a static image enhancer into a dynamic part of the gameplay pipeline, adaptable to factors like performance budgets and battery levels.
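As a rough illustration of what that adaptability could look like, the sketch below picks between native rendering and 2x upscaling from the last frame's GPU time and the battery level. It does not use the Arm plug-in's API, which the article does not describe; `FrameStats`, `choose_upscale_factor`, and the thresholds are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class FrameStats:
    gpu_frame_ms: float      # measured GPU time for the last frame
    battery_fraction: float  # 0.0 (empty) to 1.0 (full)


def choose_upscale_factor(stats, target_fps=60.0, low_battery=0.2):
    """Return 1 for native rendering or 2 for 2x upscaling (hypothetical policy)."""
    budget_ms = 1000.0 / target_fps
    # Low battery: prefer the cheaper low-resolution render path.
    if stats.battery_fraction <= low_battery:
        return 2
    # Over the frame-time budget: drop internal resolution and upscale.
    if stats.gpu_frame_ms > budget_ms:
        return 2
    # Otherwise there is headroom to render at native resolution.
    return 1


factor = choose_upscale_factor(FrameStats(gpu_frame_ms=20.0, battery_fraction=0.9))
```

A real integration would likely smooth these decisions over several frames to avoid visible flicker when switching modes.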

Still Early Days

While final silicon on the 2026 GPU roadmap is still over a year out, the NSS initiative is already influencing software workflows. Arm is working with ecosystem partners, including game engine vendors, SoC designers, and AI toolchain providers, to develop a standardized ML graphics stack that supports training, deployment, and tuning of neural render models.

The implications go beyond gaming. By giving developers hardware-accelerated inference inside the graphics path, Arm is effectively collapsing the distinction between GPU and AI accelerator. In AR/VR, this could mean smarter scene reconstruction. In mobile photography, faster denoising. And in everyday UI rendering, battery-conscious AI compositing that adapts to ambient light or user focus.

Arm’s message is clear: if AI is going to shape the future of mobile graphics, it can’t be bolted on; it has to be baked in. And with NSS and next-gen GPU-NPU integration, the company is betting that the next leap in visual quality won’t come from brute-force rendering, but from intelligent prediction at the edge.

All images used courtesy of Arm.