Kinara Aims Its Tiny Ara-2 Processor at Big Generative Edge AI Workloads

Boasting a cost-effective approach, today Kinara is rolling out its new edge AI processor designed to take on the demanding workloads brought on by generative AI.

Generative AI applications, like ChatGPT, have exploded onto the scene in the past 12 months. These workloads have proven to be very expensive and demanding, so naturally, they have found themselves mostly confined to cloud computing and data centers. However, now the industry is starting to see a push toward bringing these generative AI workloads to the edge.

To address this growing market space, today Kinara released its new Ara-2 processor, which is designed explicitly for generative AI applications at the edge. All About Circuits had the chance to talk with Ravi Annavajjhala, CEO of Kinara, to hear about the new processor firsthand.

In a tiny 17 mm × 17 mm EHS-FCBGA package, the Ara-2 chip is designed around 8 Gen-2 neural cores.

Neural-Optimized Instruction Set

The Kinara Ara-2 generative AI processor is a state-of-the-art chip designed for edge AI applications, emphasizing efficiency, performance, and versatility. Offered in a tiny 17 mm × 17 mm EHS-FCBGA package, the chip is designed around 8 Gen-2 neural cores. These cores are fully programmable compute engines with a neural-optimized instruction set. A major design consideration for these chips is power efficiency.

To that end, Kinara has added support for new data types in the Ara-2, including INT4 and MSFP16. With these data types, the Ara-2 can run TensorFlow Lite and PyTorch pre-quantized networks, which broadens the chip's applicability across AI models and allows for more flexible and efficient data processing.
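
To make the INT4 data type concrete, here is a minimal sketch of symmetric per-tensor 4-bit quantization in plain Python. It is a generic textbook scheme, not Kinara's implementation; the function names `quantize_int4` and `dequantize_int4` and the sample weights are hypothetical.

```python
def quantize_int4(values):
    """Symmetric per-tensor quantization to the signed 4-bit range [-8, 7].

    Generic illustration only; the scheme used on the Ara-2 is not described here.
    """
    max_abs = max(abs(v) for v in values)
    # Map the largest magnitude to 7; fall back to 1.0 for an all-zero tensor.
    scale = max_abs / 7 if max_abs > 0 else 1.0
    quantized = [max(-8, min(7, round(v / scale))) for v in values]
    return quantized, scale


def dequantize_int4(quantized, scale):
    """Recover approximate floating-point values from 4-bit codes."""
    return [q * scale for q in quantized]


# Hypothetical sample weights, chosen only for illustration.
weights = [0.7, -0.3, 0.12, 0.0]
codes, scale = quantize_int4(weights)
restored = dequantize_int4(codes, scale)
```

Each weight is stored as a 4-bit code plus one shared scale, so storage drops to a quarter of FP16 at the cost of a rounding error of at most half a quantization step per weight.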

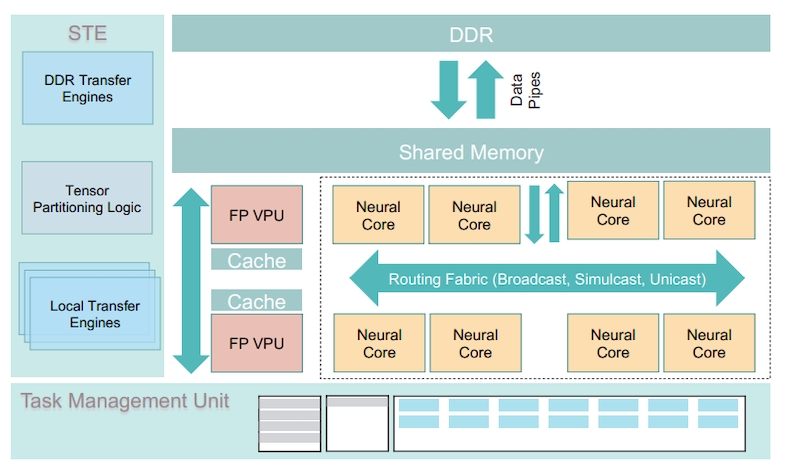

A system-level block diagram of the Ara-2

Moreover, the Ara-2 sees a big upgrade in memory capacity, with up to 16 GB of LPDDR4/LPDDR4X per chip. This has big implications for edge processing. “With 16 GB of LPDDR4 DRAM, a single Ara-2 can support up to 30 billion parameters in int4, meaning that it could run an entire large language model on the edge,” says Annavajjhala.
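
The arithmetic behind the 30-billion-parameter figure can be checked with a short sketch. It counts weight storage only and assumes decimal gigabytes; the helper name `weight_footprint_gb` is hypothetical.

```python
def weight_footprint_gb(num_params, bits_per_param):
    """Weight storage in decimal gigabytes (1 GB = 1e9 bytes), weights only."""
    return num_params * bits_per_param / 8 / 1e9


DRAM_GB = 16  # maximum DRAM per Ara-2, per the article

int4_gb = weight_footprint_gb(30e9, 4)    # 15.0 GB
fp16_gb = weight_footprint_gb(30e9, 16)   # 60.0 GB

fits_in_int4 = int4_gb <= DRAM_GB   # True
fits_in_fp16 = fp16_gb <= DRAM_GB   # False
```

At 4 bits per parameter, 30 billion weights take 15 GB, which just fits in 16 GB. The same model in 16-bit precision would need 60 GB. Activations and other runtime buffers need memory beyond the weights, so this is an upper bound on model size rather than a guarantee.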

In terms of performance, the chip also improves on its predecessor. Capable of generating a Stable Diffusion image in about 10 seconds, the new chip offers generative AI performance that is 5× to 8× better than that of the Ara-1. For vision models, the Ara-2 can run ResNet50 with a 2 ms latency.

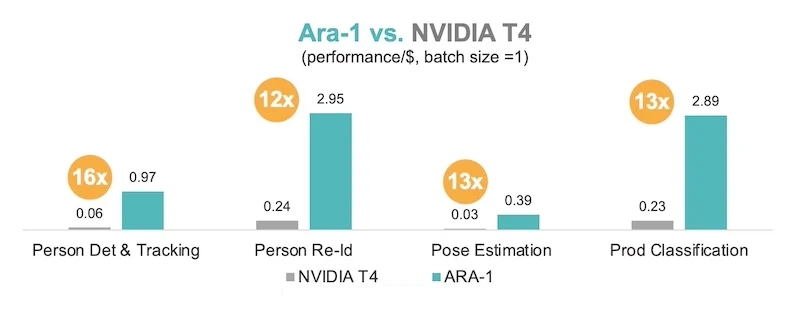

Comparison With Nvidia GPU

Comparatively, the Ara-2 aims to position itself as a more cost-effective and power-efficient alternative to conventional solutions like an Nvidia T4 GPU.

While the Ara-2 may not be able to compete with the Nvidia T4 family with respect to raw performance, it does claim to have wins with respect to performance per dollar and performance per watt. Kinara’s Ara-1 processor is already used by many of the top US retailers.

“The reason that many top US retailers choose us is because we lead the industry in total cost of ownership (TCO), and we also have the best computational efficiency in terms of performance per dollar,” says Annavajjhala.

The Ara-1 versus the Nvidia T4 in terms of performance per dollar

Part of this efficiency comes from two main architectural feats: dedicated dataflow engines and a unique AI compiler.

The dedicated dataflow engines enable software-defined tensor partitioning and optimized routing of data. This makes dataflow more efficient for any type of network architecture, resulting in lower power consumption and decreased latency.

The compiler, on the other hand, automatically determines the most efficient data and compute flow for any AI graph. This produces an optimal execution plan for a given model, balancing performance and power.

Explaining the architecture, Annavajjhala says that it's all software controlled. “The data engines have the capability of taking any n-dimensional tensor partition and route it to the compute units in a very flexible manner,” he says. “That also means that the compiler becomes a very important piece of the solution because there are literally thousands of ways of mapping any neural problem to the chip.”

“Our compiler does an optimization pass, evaluates that entire search space, and comes up with the optimal way of partitioning each subcomputation in the neural graph, mapping it to the compute units. It figures out what the data transfers need to be and creates an entire schedule while making sure that data reuse is maximized and the need for data movement is minimized.”

In this way, Kinara aims to run AI models on its hardware with the best possible performance and power efficiency.
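
The kind of search Annavajjhala describes can be illustrated with a toy example: enumerating every way to tile a matrix multiplication and keeping the tiling that moves the least data while fitting in an on-chip buffer. This is only a sketch of the general idea, not Kinara's compiler; the cost model, the buffer size, and the names `traffic` and `best_tiling` are all assumptions made for illustration.

```python
from itertools import product


def divisors(n):
    """All positive divisors of n, in ascending order."""
    return [d for d in range(1, n + 1) if n % d == 0]


def traffic(m, n, k, tm, tn):
    """Elements moved off-chip for C[m,n] = A[m,k] @ B[k,n] with tm x tn output tiles.

    Assumed cost model: each output tile loads a tm x k slice of A and a
    k x tn slice of B, then writes back tm x tn results.
    """
    tiles = (m // tm) * (n // tn)
    per_tile = tm * k + k * tn + tm * tn
    return tiles * per_tile


def best_tiling(m, n, k, buffer_elems):
    """Exhaustively search tile shapes that fit the buffer; return the cheapest."""
    candidates = [
        (tm, tn)
        for tm, tn in product(divisors(m), divisors(n))
        if tm * k + k * tn + tm * tn <= buffer_elems
    ]
    if not candidates:
        raise ValueError("no tiling fits in the on-chip buffer")
    return min(candidates, key=lambda t: traffic(m, n, k, *t))


# Hypothetical problem size and buffer capacity.
plan = best_tiling(64, 64, 64, buffer_elems=4096)
naive = traffic(64, 64, 64, 1, 1)
tuned = traffic(64, 64, 64, *plan)
```

Even in this small case, the chosen tiling moves far less data than computing one output element at a time, because each loaded slice is reused across a whole tile. A real compiler faces a much larger search space, since it must also schedule transfers and map work across multiple cores.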

Bringing Generative AI to the Edge

As generative AI gains in popularity, its growth may be hindered by the excessive cost of paying for server hours. For the future of generative AI, Kinara believes that a transition to the edge must be made. With its Ara-2 processor (sampling now), the company hopes to accelerate this shift to the edge, providing users with affordable generative AI that doesn’t sacrifice performance.
